Prerequisites for learning and running the code below

I started by learning Python and OpenCV.

These are the URLs I followed while learning:

http://docs.opencv.org/trunk/

https://www.youtube.com/

pip

easy_install

http://scikit-learn.org/

http://scikit-image.org/docs/

I then wrote the code below, adapting the examples from the URLs above:

    import cv2
    import numpy as np
    from skimage.feature import local_binary_pattern

    # Haar cascade XML files, expected in the working directory
    face_cascade = cv2.CascadeClassifier('haarcascade_frontalface_default.xml')
    eye_cascade = cv2.CascadeClassifier('rightEye.xml')
    nose_cascade = cv2.CascadeClassifier('haarcascade_mcs_nose.xml')  # loaded but not used below
    if face_cascade.empty() or eye_cascade.empty():
        raise IOError('Could not load the face/eye cascade XML files')

    # LBP settings: 16 sample points on a circle of radius 2, 'uniform' patterns
    METHOD = 'uniform'
    radius = 2
    n_points = 8 * radius
    EYE_SIZE = (21, 21)  # every eye image is resized to 21x21 before computing LBP


    def hist(ax, lbp):
        """Plot the normalized LBP histogram on a Matplotlib axis (not used below)."""
        n_bins = int(lbp.max() + 1)
        return ax.hist(lbp.ravel(), density=True, bins=n_bins, range=(0, n_bins),
                       facecolor='0.5')


    def kullback_leibler_divergence(p, q):
        """KL divergence in bits, summed only over bins that are non-zero in both."""
        p = np.asarray(p)
        q = np.asarray(q)
        filt = np.logical_and(p != 0, q != 0)
        return np.sum(p[filt] * np.log2(p[filt] / q[filt]))


    def match(refs, img):
        """Return the name of the reference whose LBP histogram is closest to img's,
        or None if no reference scores below 10."""
        best_score = 10
        best_name = None
        lbp = local_binary_pattern(img, n_points, radius, METHOD)
        n_bins = int(lbp.max() + 1)  # np.histogram needs an integer bin count
        img_hist, _ = np.histogram(lbp, density=True, bins=n_bins, range=(0, n_bins))
        for name, ref in refs.items():
            ref_hist, _ = np.histogram(ref, density=True, bins=n_bins,
                                       range=(0, n_bins))
            score = kullback_leibler_divergence(img_hist, ref_hist)
            if score < best_score:
                best_score = score
                best_name = name
        return best_name


    def load_eye(path):
        """Read a reference eye image, convert it to grayscale and resize it."""
        img = cv2.imread(path)
        if img is None:
            raise IOError('Could not read ' + path)
        img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        # interpolation must be passed by keyword: the third positional argument is dst
        return cv2.resize(img, EYE_SIZE, interpolation=cv2.INTER_AREA)


    # Reference LBP images. 'brick' (eclosed.jpg) is the closed-eye reference;
    # 'grass' and 'wall' are treated as open.
    refs = {
        'brick': local_binary_pattern(load_eye('eclosed.jpg'), n_points, radius, METHOD),
        'grass': local_binary_pattern(load_eye('eopen1.jpg'), n_points, radius, METHOD),
        'wall': local_binary_pattern(load_eye('eye2.jpg'), n_points, radius, METHOD),
    }

    if __name__ == "__main__":
        cap = cv2.VideoCapture('sample1.avi')
        while cap.isOpened():
            ret, img = cap.read()
            if not ret:  # end of the video or a read error
                break
            gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
            faces = face_cascade.detectMultiScale(gray, 1.3, 5)
            for (x, y, w, h) in faces:
                cv2.rectangle(img, (x, y), (x + w, y + h), (255, 0, 0), 2)
                roi_gray = gray[y:y + h, x:x + w]
                roi_color = img[y:y + h, x:x + w]
                eyes = eye_cascade.detectMultiScale(roi_gray)
                for (ex, ey, ew, eh) in eyes:
                    cv2.rectangle(roi_color, (ex, ey), (ex + ew, ey + eh), (0, 255, 0), 2)
                if len(eyes) < 2:  # the state check below uses the second detection
                    continue
                # Crop from the grayscale ROI so the green boxes drawn on roi_color
                # do not end up inside the eye image (left-eye check, eyes[0], is disabled).
                ex, ey, ew, eh = eyes[1]
                right_eye = cv2.resize(roi_gray[ey:ey + eh, ex:ex + ew], EYE_SIZE,
                                       interpolation=cv2.INTER_AREA)
                rstate = match(refs, right_eye)
                label = 'closed' if rstate == 'brick' else 'open'
                cv2.putText(img, label, (10, 90), cv2.FONT_HERSHEY_SIMPLEX, 1,
                            (255, 0, 255), 2, cv2.LINE_AA)
            cv2.imshow('frame', img)
            if cv2.waitKey(1) & 0xFF == 32:  # press the space bar to quit
                break
        cap.release()
        cv2.destroyAllWindows()

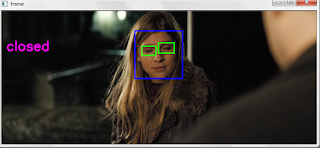

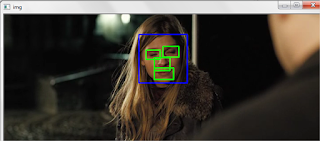

Output:

Algorithm used:

Eye Extraction Module

Input: video file or camera stream

Method:

For each frame:

1. Detect faces using the Viola-Jones face detection algorithm.

2. Crop the face region.

3. Detect eyes in the cropped face using the Viola-Jones eye detector. From the returned eye bounding boxes, pick the pair whose positions are approximately equal in the y-direction.

4. Crop the right eye.

Output: eye_image
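The pairing rule in step 3 can be sketched as follows. This is a minimal illustration under stated assumptions, not part of the script above, which simply uses the second detected box (`eyes[1]`). The names `find_eye_pair` and `subject_right_eye` and the tolerance `y_tol` (a fraction of the pair's mean box height) are hypothetical choices, and the sketch assumes an unmirrored frame, where the subject's right eye appears on the left side of the image.

```python
def find_eye_pair(boxes, y_tol=0.25):
    """Return the two (x, y, w, h) boxes whose y positions are closest,
    ordered left to right in the image, or None if no pair is close enough.

    y_tol is a hypothetical tolerance: the allowed y difference as a
    fraction of the pair's mean box height.
    """
    # Accept rows from detectMultiScale (NumPy arrays) as well as tuples
    boxes = [tuple(int(v) for v in b) for b in boxes]
    best, best_dy = None, None
    for i in range(len(boxes)):
        for j in range(i + 1, len(boxes)):
            (_, y1, _, h1), (_, y2, _, h2) = boxes[i], boxes[j]
            dy = abs(y1 - y2)
            if dy > y_tol * (h1 + h2) / 2:
                continue  # not at roughly the same height
            if best_dy is None or dy < best_dy:
                best, best_dy = (boxes[i], boxes[j]), dy
    if best is None:
        return None
    return tuple(sorted(best, key=lambda b: b[0]))


def subject_right_eye(pair):
    """Assuming an unmirrored frame, the subject's right eye is the leftmost box."""
    return pair[0]


boxes = [(10, 20, 30, 30), (60, 22, 30, 30), (35, 70, 20, 15)]
pair = find_eye_pair(boxes)
print(pair)                     # ((10, 20, 30, 30), (60, 22, 30, 30))
print(subject_right_eye(pair))  # (10, 20, 30, 30)
```

The third box is rejected because its y position is far from the other two. Step 4 then crops `roi_gray[y:y + h, x:x + w]` with the selected box, as the script does.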

Eye State Detection Module

Input: open- and closed-eye training images and a test eye image

Method:

1. Compute the LBP codes of each eye image in the training and test data.

2. Build an equal-width histogram of each image's LBP codes and normalize it, so that each bin holds the value of the probability density function for that bin.

3. Compute the Kullback-Leibler divergence between the test image's distribution and each training image's distribution.

4. Output the label of the training image with the smallest divergence.

Output: the state of the eye, either ‘closed’ or ‘open’
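The four steps of the eye state module can be sketched end to end in plain NumPy. This is a simplified stand-in, not the script's method: `basic_lbp` computes the basic 8-neighbour, radius-1 LBP (codes 0 to 255) instead of scikit-image's `local_binary_pattern(img, 16, 2, 'uniform')`, and `kl_divergence` adds a small epsilon to every bin and renormalizes instead of skipping zero bins. With that smoothing, identical histograms score exactly 0 and all others score above 0, whereas skipping zero bins can yield a score of 0 or even a negative score. The divergence is D(p‖q) = Σ p_i log2(p_i / q_i). All function names and the synthetic 21x21 images are hypothetical.

```python
import numpy as np


def basic_lbp(gray):
    """Basic 8-neighbour, radius-1 LBP for interior pixels (codes 0..255)."""
    g = np.asarray(gray, dtype=np.int32)
    h, w = g.shape
    center = g[1:-1, 1:-1]
    # Neighbour offsets, clockwise from the top-left pixel
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros_like(center)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = g[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        # Set this bit where the neighbour is at least as bright as the center
        codes |= (neighbour >= center).astype(np.int32) << bit
    return codes


def lbp_histogram(codes, n_bins=256):
    """Equal-width (width 1) normalized histogram: bin values sum to 1."""
    hist, _ = np.histogram(codes, bins=n_bins, range=(0, n_bins), density=True)
    return hist


def kl_divergence(p, q, eps=1e-10):
    """KL divergence in bits after epsilon smoothing and renormalization."""
    p = np.asarray(p, dtype=float) + eps
    q = np.asarray(q, dtype=float) + eps
    p /= p.sum()
    q /= q.sum()
    return float(np.sum(p * np.log2(p / q)))


def classify(test_gray, references):
    """Return the label of the reference with the smallest divergence."""
    test_hist = lbp_histogram(basic_lbp(test_gray))
    scores = {label: kl_divergence(test_hist, lbp_histogram(basic_lbp(ref)))
              for label, ref in references.items()}
    return min(scores, key=scores.get)


# Synthetic stand-ins for the training images (hypothetical data)
rng = np.random.default_rng(0)
flat = np.full((21, 21), 120, dtype=np.uint8)                # smooth patch
noisy = rng.integers(0, 256, size=(21, 21)).astype(np.uint8)  # textured patch
refs = {"closed": flat, "open": noisy}

print(classify(flat, refs))   # closed
print(classify(noisy, refs))  # open
```

With real data, the references would be the resized closed- and open-eye crops, and the test image would be the cropped eye from each frame.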